Sanity checking when model behaviours should overlap#

Many of the model classes in GPflow have overlapping behaviour in special cases. In this notebook, we fit some approximations to a model with a Gaussian likelihood, and make sure they’re all the same.

The models are: - GPR: Full Gaussian process regression.

VGP: A Gaussian approximation with Variational Bayes. Approximating a Gaussian posterior with a Gaussian should be exact.SVGP: a sparse GP, with a Gaussian approximation. The inducing points are set to be at the data points, so again, should be exact.SVGP(with whitened representation): As above, but with a rotation applied to whiten the representation of the process.SGPR: A sparse GP with a collapsed posterior (Titsias 2009). Again, the inducing points are fixed to the data points.GPRFITC: The FITC approximation. Again, the inducing points are fixed to the data points.

In all cases the parameters are estimated by the method of maximum likelihood (or approximate maximum likelihood, as appropriate). The parameter estimates should all be the same.

[1]:

import gpflow

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

from gpflow import set_trainable

from gpflow.models import maximum_log_likelihood_objective, training_loss_closure

from gpflow.config import default_float

from gpflow.ci_utils import reduce_in_tests

%matplotlib inline

plt.rcParams["figure.figsize"] = (12, 6)

2022-09-20 14:25:01.742460: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 AVX512F FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2022-09-20 14:25:01.901629: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory

2022-09-20 14:25:01.901651: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

2022-09-20 14:25:01.931405: E tensorflow/stream_executor/cuda/cuda_blas.cc:2981] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

2022-09-20 14:25:02.830179: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libnvinfer.so.7'; dlerror: libnvinfer.so.7: cannot open shared object file: No such file or directory

2022-09-20 14:25:02.830245: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libnvinfer_plugin.so.7'; dlerror: libnvinfer_plugin.so.7: cannot open shared object file: No such file or directory

2022-09-20 14:25:02.830253: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Cannot dlopen some TensorRT libraries. If you would like to use Nvidia GPU with TensorRT, please make sure the missing libraries mentioned above are installed properly.

/home/circleci/project/gpflow/experimental/utils.py:42: UserWarning: You're calling gpflow.experimental.check_shapes.decorator.check_shapes which is considered *experimental*. Expect: breaking changes, poor documentation, and bugs.

warn(

/home/circleci/project/gpflow/experimental/utils.py:42: UserWarning: You're calling gpflow.experimental.check_shapes.inheritance.inherit_check_shapes which is considered *experimental*. Expect: breaking changes, poor documentation, and bugs.

warn(

[2]:

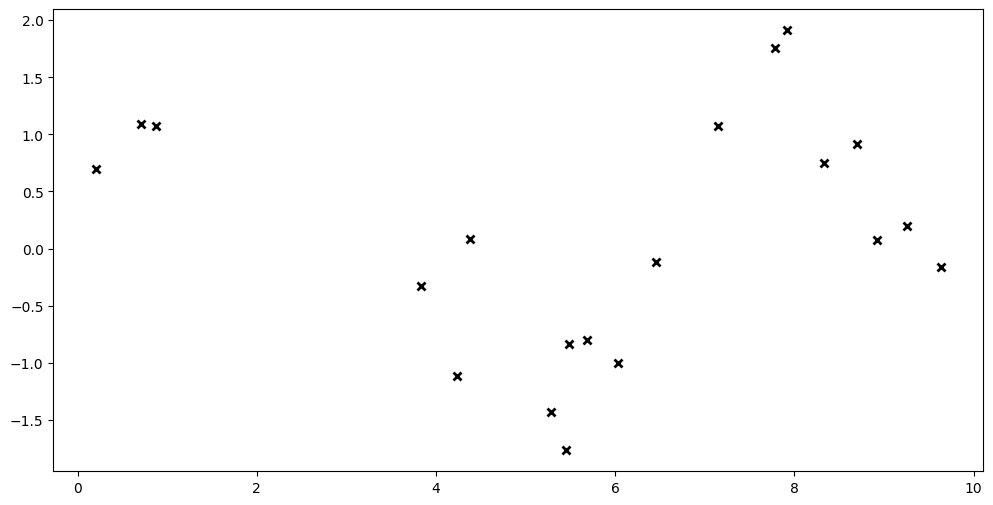

np.random.seed(0)

X = np.random.rand(20, 1) * 10

Y = np.sin(X) + 0.9 * np.cos(X * 1.6) + np.random.randn(*X.shape) * 0.4

Xtest = np.random.rand(10, 1) * 10

_ = plt.plot(X, Y, "kx", mew=2)

[3]:

data = (

tf.convert_to_tensor(X, dtype=default_float()),

tf.convert_to_tensor(Y, dtype=default_float()),

)

inducing_variable = tf.convert_to_tensor(X, dtype=default_float())

m1 = gpflow.models.GPR(data, kernel=gpflow.kernels.SquaredExponential())

m2 = gpflow.models.VGP(

data, kernel=gpflow.kernels.SquaredExponential(), likelihood=gpflow.likelihoods.Gaussian()

)

m3 = gpflow.models.SVGP(

gpflow.kernels.SquaredExponential(),

gpflow.likelihoods.Gaussian(),

inducing_variable,

q_diag=False,

)

set_trainable(m3.inducing_variable, False)

m4 = gpflow.models.SVGP(

gpflow.kernels.SquaredExponential(),

gpflow.likelihoods.Gaussian(),

inducing_variable,

q_diag=False,

whiten=True,

)

set_trainable(m4.inducing_variable, False)

m5 = gpflow.models.SGPR(

data, kernel=gpflow.kernels.SquaredExponential(), inducing_variable=inducing_variable

)

set_trainable(m5.inducing_variable, False)

m6 = gpflow.models.GPRFITC(

data, kernel=gpflow.kernels.SquaredExponential(), inducing_variable=inducing_variable

)

set_trainable(m6.inducing_variable, False)

models = [m1, m2, m3, m4, m5, m6]

2022-09-20 14:25:06.233866: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcuda.so.1'; dlerror: libcuda.so.1: cannot open shared object file: No such file or directory

2022-09-20 14:25:06.233892: W tensorflow/stream_executor/cuda/cuda_driver.cc:263] failed call to cuInit: UNKNOWN ERROR (303)

2022-09-20 14:25:06.233911: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:156] kernel driver does not appear to be running on this host (55cb631c4c84): /proc/driver/nvidia/version does not exist

2022-09-20 14:25:06.234159: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 AVX512F FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

/home/circleci/project/gpflow/experimental/utils.py:42: UserWarning: You're calling gpflow.experimental.check_shapes.checker.ShapeChecker.__init__ which is considered *experimental*. Expect: breaking changes, poor documentation, and bugs.

warn(

Now, we optimize the models. For GPR, SVGP, and GPRFITC, this simply optimizes the hyperparameters (since the inducing points are fixed). For the variational models, this jointly maximises the lower bound to the marginal likelihood (Evidence Lower Bound, ELBO) with respect to the variational parameters and the kernel and likelihood hyperparameters.

[4]:

for m in models:

opt = gpflow.optimizers.Scipy()

loss_closure = training_loss_closure(m, data)

opt.minimize(

loss_closure,

variables=m.trainable_variables,

options=dict(maxiter=reduce_in_tests(1000)),

compile=True,

)

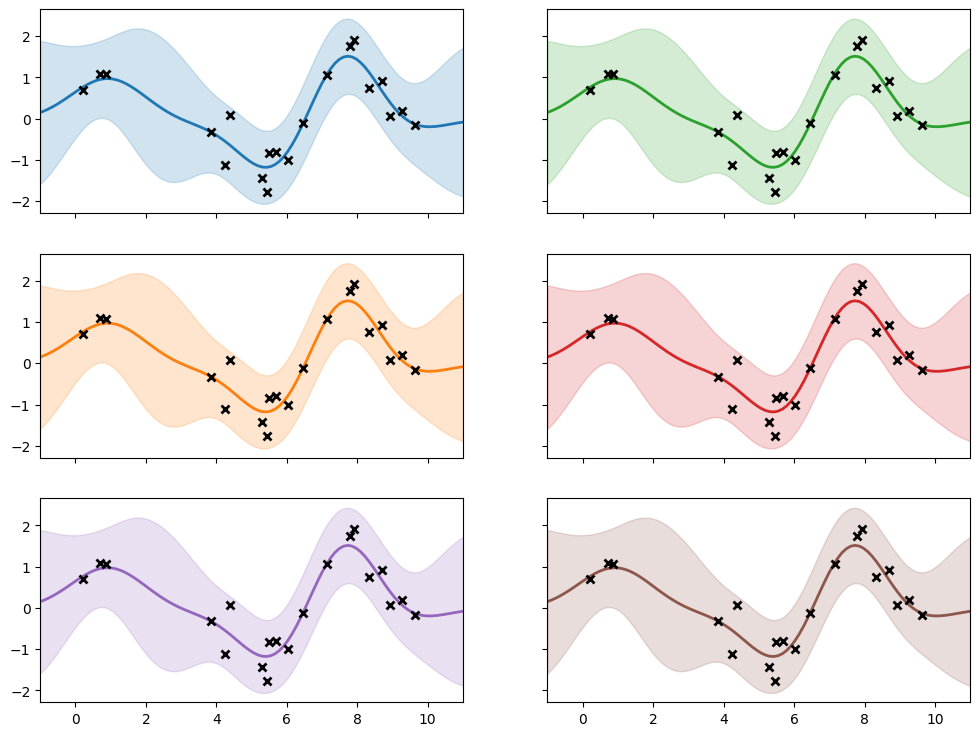

If everything worked as planned, the models should have the same:

prediction functions

log (marginal) likelihood

kernel parameters

For the variational models, where we use a ELBO in place of the likelihood, the ELBO should be tight to the likelihood in the cases studied here.

[5]:

def plot(m, color, ax):

xx = np.linspace(-1, 11, 100)[:, None]

mu, var = m.predict_y(xx)

ax.plot(xx, mu, color, lw=2)

ax.fill_between(

xx[:, 0],

mu[:, 0] - 2 * np.sqrt(var[:, 0]),

mu[:, 0] + 2 * np.sqrt(var[:, 0]),

color=color,

alpha=0.2,

)

ax.plot(X, Y, "kx", mew=2)

ax.set_xlim(-1, 11)

f, ax = plt.subplots(3, 2, sharex=True, sharey=True, figsize=(12, 9))

plot(m1, "C0", ax[0, 0])

plot(m2, "C1", ax[1, 0])

plot(m3, "C2", ax[0, 1])

plot(m4, "C3", ax[1, 1])

plot(m5, "C4", ax[2, 0])

plot(m6, "C5", ax[2, 1])

Here are the kernels and likelihoods, which show the fitted kernel parameters and noise variance:

[6]:

for m in models:

print(m.__class__.__name__)

print(f" kernel lengthscale = {m.kernel.lengthscales.numpy():.5g}")

print(f" kernel variance = {m.kernel.variance.numpy():.5}")

print(f" likelihood variance = {m.likelihood.variance.numpy():.5}")

GPR

kernel lengthscale = 1.0774

kernel variance = 0.82561

likelihood variance = 0.16002

VGP

kernel lengthscale = 1.0773

kernel variance = 0.82546

likelihood variance = 0.16002

SVGP

kernel lengthscale = 1.0774

kernel variance = 0.82549

likelihood variance = 0.16003

SVGP

kernel lengthscale = 1.0774

kernel variance = 0.82549

likelihood variance = 0.16003

SGPR

kernel lengthscale = 1.0774

kernel variance = 0.82561

likelihood variance = 0.16002

GPRFITC

kernel lengthscale = 1.0774

kernel variance = 0.82561

likelihood variance = 0.16002

Here are the likelihoods (or ELBOs):

[7]:

for m in models:

print(f"{m.__class__.__name__:30} {maximum_log_likelihood_objective(m, data)}")

GPR -18.83436136005674

VGP -18.834361541124224

SVGP -18.834400091469195

SVGP -18.834400091469195

SGPR -18.83439998906201

GPRFITC -18.834354780715604